Creating images is one of the most beloved and accessible applications of AI. When AI image models like DALLE (through ChatGPT) and Midjourney emerged, people quickly began experimenting, creating a flood of interesting and well, weird new art being created every day. It gave both designers and non-designers a simple tool to create “AI art” with nothing more than a simple text prompt and their imagination.

I’ve been planning to write a piece about AI image generation for a while now and I’m glad I waited. Recent advancements have significantly changed how image generation capabilities function within language models. Both the capabilities of these image generators and the way that they operate within LLMs have changed dramatically since 2021 when they gained traction.

As one example - check out the menu that I created for the AI Food Lab post below on the left vs the exact same prompted image just a couple of months later. Same prompt, very different result. AI images just became much easier to use and more useful for the average user.

So what exactly changed? And how can we utilize it? Let’s dive in.

Diffusion: The First Wave of AI Image Generation

Early AI image generators relied heavily on Diffusion Models. Trained on massive image datasets, these models worked similarly to language models – progressively refining random noise into coherent images based on text prompts. It was a breakthrough that produced high-quality and detailed images from text, and these models have gotten significantly better over time, as you can see below.

For several years, LLMs have leveraged image generators like OpenAI’s DALLE and Google’s Imagen to deliver photos to you directly through its chat portal. While impressive, it also had mixed results due to the relay between these two systems. This setup often led to imperfect results, as the sophisticated LLM would pass complex instructions to a simpler image generator, which frequently misinterpreted the prompts.

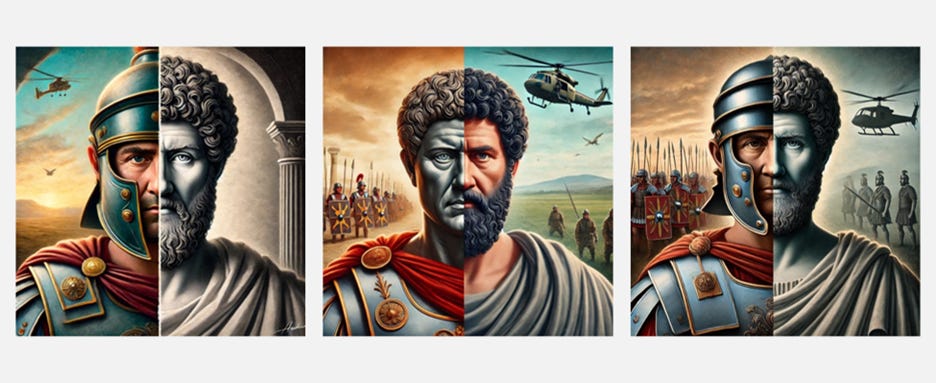

For instance, I kept asking GPT to omit helicopters (while writing this piece) but the image generator on the backend (DALLE 3) probably just kept hearing helicopters in the prompt, and continued to include it in new images every single time.

Furthermore, Diffusion images lacked the ability to edit images iteratively. Each prompt produced a fresh image from scratch, which made refining an image over multiple attempts difficult.

The Emergence of Multimodal Image Models

Recently, both Google and OpenAI (which has gotten all the attention) have announced new image generation capabilities directly within their multimodal LLM models. Unlike previous systems, these models now generate images directly instead of relying on a separate generator. This is a big change and a big deal!

The main takeaway, for me at least, is that it allows for conversational editing of images and is much better at generating images that meet precise requirements. (We’ll explore more about this below and per usual, Ethan Mollick has a great piece if you want to get in depth on this changes and their implications.)

To explore these new models a bit, I took some of the older pictures that I’ve used in this blog with a modern “multimodal” version that I created with the exact same prompt for each. Left uses ChatGPT through DALLE 3 and the right image uses the new multimodal ChatGPT Model 4o.

The two models certainly produce notable differences. While DALL·E images often appear layered or stitched together, and slightly disconnected, like layers of pictures were overlayed with one another. The newer model’s outputs are more cohesive and realistic, and also contain much more accurate text. However, I find the newer model’s precision sacrifices a bit of the creative chaos element that made it appealing, at least at times.

I mean, just look at how it handled my Roman battle scene prompt when I returned to it. It’s a drastic improvement.

Pricing

Both ChatGPT and Gemini offer multimodal image generation. While free plans provide limited image generation, paid plans ($20/mo) will give you full access to this feature with virtually unlimited usage (along with everything else they offer).

You can try out image generation but to really get the benefit you’ll have to pay up, and I increasingly think it’s a worthwhile investment.

AI Image Generation in Action

There are many fun and effective uses of AI image generation capabilities, directly from ChatGPT or Gemini, and they have expanded far beyond prompt-based art creation. Let’s explore a few.

Creating new images from text

This is the original use-case. One day we woke up and realized we could all create pictures of whatever we wanted based on a simple text prompt. People started putting out tons of fun and creative content into the world (as well as a bunch of weird stuff).

If you haven’t spent much time creating images just start prompting it with whatever comes to mind. I’m no expert but here are a few I made just playing around with it.

For inspiration, I encourage you to look at some of the cool AI art out there. There is so much cool art to check out and there are many AI Artists that are really creative. Here are a few places to check out:

https://lexica.art - An engine that allows users to generate and explore AI generated images that also surfaces the prompt of each.

https://www.reddit.com/r/aiArt - Reddit is a great place to share and explore with others.

https://www.nvidia.com/en-us/research/ai-art-gallery - Nvidia’s AI Art Showcase

https://www.artzone.ai/ - Content with prompts along with AI Artists to follow

There are many others. Any of these will give you some inspiration to get started and the prompts can really help you explore possibilities and hone in on your style.

Image editing and enhancement

You can now upload pictures and edit and enhance them. Let’s start with the apparent killer application that has taken the internet by storm: photo conversion. You can now apply just about any art style or theme to an uploaded picture.

OpenAI went viral the last couple weeks based on this X post.

Suddenly everyone started uploading their favorite photos in Studio Ghibli theme. There has been so much AI generated Ghibli on the internet in the last couple weeks… the ability to convert images into new styles is new and apparently has mass appeal (perhaps even more so than generating new AI art, interestingly).

Basically, upload any photo and ask it to edit it. You could convert the entire theme to anime or Legos or impressionism or… well just about anything. Anime is in right now.

It’s fun, try it out.

Styles to consider: As with most things AI, you’re limited by your imagination (and the tools censors, at times). You can come up with just about any style and ask AI for a picture (or to convert a picture) in that way. A few ideas to start with:

Changing pictures iteratively is where these new models really shine! What these styles lacked in the past is the ability to make iterative changes. Obviously, it’s not a tool like Photoshop where you can change every intricate detail exactly as you want, but AI can now generate new images with small changes correctly and consistently. Improve image quality if it’s a bit blurry. Remove or add objects in or out of the photo.

For instance, below is a logo that I created for our home bar Club Linus. Naturally our mascot features our cat Linus. I edited that initial version a few times based on simple text prompts. You can do these with any pictures, including real pictures from your camera that you upload, or generated images that AI creates for you.

Marketing Design and Branding

AI is a great tool for marketing and branding, especially if you’re a little flexible on format.

Speaking of Club Linus, let’s go ahead and create some more marketing material for our patrons. How about some drinkware with our new logo on it, a flyer for our annual tiki party, and our weekday happy hours. I’m open to how they look and the results after a single prompt are pretty fantastic.

It’s also a great tool for storyboarding and creative collaboration because it’s so quick and easy to use. While you probably aren’t going to use it if you need a very polished professional end-product, it’s perfect to mockup draft storyboards.

Sketching to Images

You can also turn sketches or outlines into fully generated images as well. This gives you a little more control in the final product as it provides a structure to the LLM (even if it’s a little rough). Here I asked it to create a flyer for me based on a very rough sketch and, while it doesn’t follow my instructions exactly, it led to a great outcome. (It can also convert and edit talented sketches and drawings as well.)

Design: Visualizing and prototyping

These tools are also powerful for design. They allow ideas to be drafted up and explored with little effort.

This user developed dress design visuals and placed them on an AI model. What’s most interesting, if you click the link and see all examples, is that AI kept the studio backgrounds consistent for each dress, which gives the whole thing a realistic feel.

This person used AI for a little interior design work. Just take a picture and ask it to give you some ideas. Have fun with it.

Tips and Tricks for Better AI Image Generation

It’s helpful to use AI to brainstorm and explore what’s possible. The biggest suggestion that I have to give AI an example prompt first and ask it for suggestions on how to make it more effective visually, along with offering to clarify any questions that it has.

I thought this recent Reddit post was a fun and helpful guide. It encourages thorough direction as the model doesn’t know what it doesn’t know – if you’re looking for something specific, it needs to be outlined clearly. Whether you use this person’s guide or not, spending a little extra time on the prompt for pictures goes a long way.

For this blog, I sometimes prompt pictures directly, but sometimes I ask ChatGPT for some creative ideas on how to visualize the post theme. I will then try a few out until I get one that I like, and then typically refine the prompt once or twice before going final.

Ethical and Legal Considerations

We are so early in AI and AI Image generation and there are many issues that still need to be ironed out. The most obvious relates to legality and copyright law. There are many pictures and styles that you can generate with these AI tools today that are expressly based on copyrighted IP (such as Muppets, Simpsons, South Park, and yes Ghibli).

Who gets credit? Who owns and profits from the results? All unanswered questions, and I fully expect the ability to create these types of copyrighted images to be disabled over time.

Conclusion

AI image generation is one of the most engaging and accessible creative applications of AI. With their integration into LLMs, the tools are becoming even more powerful and user-friendly. While challenges remain, the potential for creative expression and productivity is immense. Try it out and share your results below—have fun with it!